Abstract

Self-training crowd counting has not been thoroughly explored, although it is one of the important challenges in computer vision. In practice, fully supervised methods usually require intensive manual annotation. To address this challenge, this work introduces a new domain adaptation approach for crowd counting that uses existing datasets with ground truth to produce more robust predictions on unlabeled datasets. While the network is trained with labeled data, unlabeled samples from the target domain are also added to the training process. In this process, an entropy map is computed and minimized, in addition to an adversarial training process designed in parallel.

Experiments on the ShanghaiTech, UCF_CC_50, and UCF-QNRF datasets show a more general improvement of our method over other state-of-the-art methods in the cross-domain setting.
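The abstract describes two training signals for unlabeled target-domain samples: entropy minimization and a parallel adversarial process. The sketch below illustrates both in plain Python so each formula stays visible. It assumes the classification map stores C ≥ 2 class probabilities per location (for example, background vs. person) and that discriminator outputs are given as probabilities in (0, 1); what the discriminator actually receives is not stated on this page. All function names are hypothetical, and this follows a common entropy-based adversarial adaptation recipe rather than the authors' exact implementation.

```python
import math

EPS = 1e-12  # small constant so log(0) is never evaluated


def entropy_map(prob_map):
    """Per-location entropy of a classification map, normalized to [0, 1].

    prob_map: H x W nested list; each cell is a list of C >= 2 class
    probabilities summing to 1 (assumed layout, e.g. [background, person]).
    """
    out = []
    for row in prob_map:
        out_row = []
        for probs in row:
            h = -sum(p * math.log(p + EPS) for p in probs)
            out_row.append(h / math.log(len(probs)))  # divide by max entropy log(C)
        out.append(out_row)
    return out


def entropy_loss(prob_map):
    """Mean entropy over all locations of a target-domain map.

    Minimizing this pushes target predictions toward confident outputs.
    """
    values = [v for row in entropy_map(prob_map) for v in row]
    return sum(values) / len(values)


def bce(prob, label):
    """Binary cross-entropy for one discriminator output prob in (0, 1)."""
    return -(label * math.log(prob + EPS) + (1 - label) * math.log(1 - prob + EPS))


def discriminator_loss(d_source, d_target):
    """Discriminator is trained to score source inputs 1 and target inputs 0."""
    src = sum(bce(p, 1.0) for p in d_source) / len(d_source)
    tgt = sum(bce(p, 0.0) for p in d_target) / len(d_target)
    return src + tgt


def adversarial_loss(d_target):
    """Counting network is trained so target inputs are scored as source (label 1)."""
    return sum(bce(p, 1.0) for p in d_target) / len(d_target)
```

For example, `entropy_loss([[[0.5, 0.5], [0.5, 0.5]]])` returns approximately 1.0 (maximal uncertainty for two classes), whereas `entropy_loss([[[0.99, 0.01]]])` is about 0.081. In a real training loop these terms would be computed on tensors and added to the supervised source loss with weights that this page does not specify.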

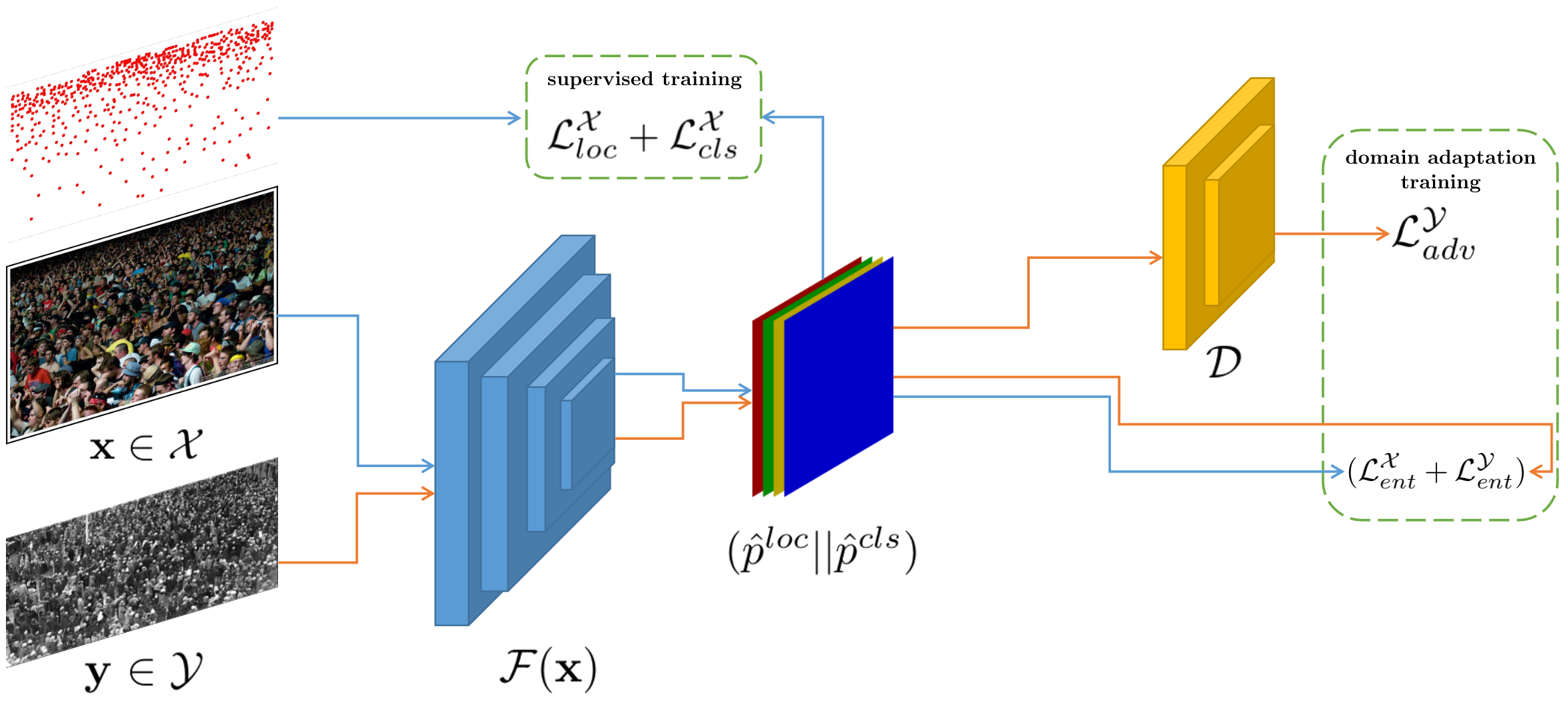

Overall framework

Given an image sample, the deep network first extracts features, then estimates a location offset map and a classification map. For a source-domain sample, labels are available, so the supervised L2 distance loss and cross-entropy loss can be computed directly and used to guide the network. Target-domain samples, on the other hand, have no labels, so adaptive domain loss functions are additionally employed to guide the domain adaptation learning process. Blue arrows indicate the learning flow of source samples, while orange arrows indicate the learning flow of target samples.
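The caption names the two supervised losses applied to source-domain samples. A minimal sketch, assuming each predicted point has already been matched to a ground-truth point (the matching step is not described here), that the L2 distance loss is the mean squared Euclidean distance, and that the classification map holds [background, person] probabilities per location. The function names and the `lambda_loc` weight are hypothetical, not values or APIs from the paper.

```python
import math

EPS = 1e-12  # keeps log() finite when a probability is exactly 0


def l2_distance_loss(pred_points, gt_points):
    """Mean squared Euclidean distance between predicted points and their
    matched ground-truth points (pairing by index is assumed here)."""
    if len(pred_points) != len(gt_points) or not pred_points:
        raise ValueError("need the same, non-zero number of predicted and ground-truth points")
    total = sum((px - gx) ** 2 + (py - gy) ** 2
                for (px, py), (gx, gy) in zip(pred_points, gt_points))
    return total / len(pred_points)


def cross_entropy_loss(probs, labels):
    """Mean cross-entropy over classification-map locations.

    probs: per-location class probabilities, e.g. [p_background, p_person].
    labels: per-location class index (0 = background, 1 = person).
    """
    return -sum(math.log(p[y] + EPS) for p, y in zip(probs, labels)) / len(labels)


def source_supervised_loss(pred_points, gt_points, probs, labels, lambda_loc=1.0):
    """Supervised loss for one source-domain sample.

    lambda_loc is a hypothetical weight balancing the two terms.
    """
    return cross_entropy_loss(probs, labels) + lambda_loc * l2_distance_loss(pred_points, gt_points)
```

For example, a point predicted at (0, 0) against a ground-truth point at (3, 4) contributes a squared distance of 25 to the location term. Because target samples have no labels, these functions apply only to the source branch (blue arrows); the target branch relies on the adaptive domain losses instead.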

Publication

Self-supervised Domain Adaptation in Crowd Counting

Pha Nguyen, Thanh-Dat Truong, Miaoqing Huang, Yi Liang, Ngan Le, and Khoa Luu

2022 IEEE International Conference on Image Processing (ICIP), 2022.

@inproceedings{nguyen2022selfsupervised,
  author = {Nguyen, Pha and Truong, Thanh-Dat and Huang, Miaoqing and Liang, Yi and Le, Ngan and Luu, Khoa},
  title = {Self-supervised Domain Adaptation in Crowd Counting},
  booktitle = {2022 IEEE International Conference on Image Processing (ICIP)},
  year = {2022}
}